How to Install Spark SQL Thrift Server (Hive) and connect it with Helical Insight

In this article, we will see how to install Spark SQL Thrift Server (Hive) and how to fetch data from spark thrift server in helical insight

Prerequisite: Helical Insight should be installed and running.

We will now see how to install Spark and then run hive thrift server. (Windows 64 Bit )

- Download spark from the link (http://d3kbcqa49mib13.cloudfront.net/spark-2.1.0-bin-hadoop2.7.tgz) and extract tgz file in ‘C:’ drive.

- Hadoop winutils is also needed to run Spark in windows. Download hadoop winutils from link(http://rwthaachen.dl.osdn.jp/win-hadoop/62852/hadoop-winutils-2.6.0.zip) and extract zip file in ‘C’ drive

- Now we will set the HADOOP_HOME environment variable

- Go to my computers

- Click on Properties

- Click on advanced system settings

- Click on Environment variables

- Click on new

- Give variable name : HADOOP_HOME

- variable value : location of hadoop Example : C:\hadoop

- Click on OK

- click on path

- click on Edit

- add C:\hadoop at end of the path

- You need to run the following command to make the C:\tmp\hive read write and executable

- Run command in administrator mode and change directory to spark-2.1.0-bin-hadoop2.7\ spark-2.1.0-bin-hadoop2.7\bin. Administrator mode is required while installation. Otherwise you may get the error.

- Execute below command in command line Spark-submit –verbose –class org.apache.spark.sql.hive.thriftserver.HiveThriftServer2 –hiveconf hive.server2.thrift.port=10000 –driver-memory 512m –master=local

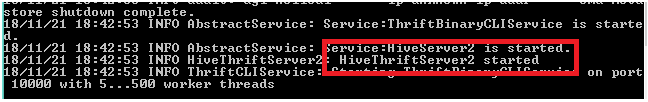

- Once you will get message HiveServer2 is started then execute beeline script which is present in spark-2.1.0-bin-hadoop2.7/bin folder

- Execute below command in command line for connecting to the server using JDBC url for your server

- Enter username and password of hive

- Once you get a message Connected to: Spark SQL. Now you can run queries for creating table and insert values to table

Example: winutils.exe chmod -R 777 C:\tmp\hive

C:\> cd spark-2.1.0-bin-hadoop2.7\ spark-2.1.0-bin-hadoop2.7\bin

Instead of 10000 give your hive port at highlighted portion

Example: C:\spark-2.1.0-bin-hadoop2.7\spark-2.1.0-bin-hadoop2.7\bin>beeline

!connect jdbc:hive2://localhost:10000

Example: beeline > !connect jdbc:hive2://localhost:10000

Example: 0: jdbc:hive2://localhost:10000> CREATE DATABASE `sampletraveldata`;

0: jdbc:hive2://localhost:10000> USE `sampletraveldata`;

0: jdbc:hive2://localhost:10000> CREATE TABLE `employee_details` (

`employee_id` int, `employee_name` String);

0: jdbc:hive2://localhost:10000> INSERT INTO TABLE `employee_details` VALUES

(1, 'Mike Cannon-Brookes');

0: jdbc:hive2://localhost:10000> select * from sampletraveldata;

We will now see how to fetch data from spark using below steps.

Below steps for create datasouce from spark hive thirft server in helical insight application:

- Go to Helical insight application -> Click on Default user or Default Admin

- Click on Datasource page and then look for hive datasource (using hive you can connect spark)

- Click on create option and enter your hive details as mentioned in below image then click on test connection if test connection is successful and then click on save datasource

- Click on create metadata action by using this datasource -> click on schema -> select tables and give right click -> click on Add to metadata -> Save metadata

- Click on Reports page -> click on connect to same metadata -> select same metadata -> give right click and click on use this metadata

- Drag column to selection area from selected table and generate report with available visualization types

This way helical insight BI tool is capable of connecting spark thrift server (hive) and fetching data from spark.